Next Level Art and the Future of Work and Leisure

Becoming More Creative (and Human) with AI

The fact that AI and deep learning have had a tremendous impact on a vast number of domains and initiated much disruption and progress over the last few years won’t come as a surprise to many of you reading this.

However, what might be surprising to some is that even art and the creative fields, domains that have always been seen as distinctly human, have not been left unaffected by these recent advances.

The fear of AI replacing jobs is one of the most common concerns surrounding these technologies. And now it is even encroaching on our creative pursuits. Does that mean we have to not only worry about our jobs being lost to AI, but also our humanity?

While many either fear that AI will replace or substitute humans, or argue that an AI can never be creative and that anything generated by AI is by definition not art, I want to present an alternative view. I believe that advanced AI will allow us to focus on our unique talents and strengths, provide us with new tools for creative exploration and expression, and allow us to enjoy more high quality leisure time.

Ultimately it will enable us to be more human.

In this article I first want to give you a very brief (and highly incomplete) introduction to the intersection of deep learning and art, and introduce you to a small selection of artists who have adopted neural networks as their preferred medium.

I then want to introduce you to some projects that my current company Qosmo has been working on over the past few years, as well as some of my own personal projects.

Finally, I want to share with you a vision for the future of not only AI and creativity, but more broadly work and humanity.

I hope that by the end of this article I will have convinced you that we should neither fear AI as diminishing or devaluing our humanity, nor dismiss it as something that will only impact routine work but won’t have any influence on our creative capacity. Instead, I hope that by the end you will be excited about the future of AI, and feel ready to embrace it not as a competitor, but as a powerful tool to regain and reinforce our humanity.

Sidenote: This article is based on a series of talks I gave at SciPy Japan 2019 and, in an extended version, at Nikkei AI Summit 2019. If you prefer to watch a video you can find the SciPy talk on YouTube. But this article is both more recent and significantly more in depth, particularly the final section on the future of knowledge work and creativity, which I didn’t have time to touch on at all during the SciPy talk.

A Very Brief History of AI Art

The first time the connection between AI and creativity seeped out of rather esoteric circles and into mainstream consciousness was probably when Google announced DeepDream in 2015.

Imagine staring at a cloud. After a little while you get the sense that you can see a pattern there. Maybe a face. The longer you stare at the cloud, and the more you think of the face, the more you actually convince yourself that there is really a face in the cloud staring back at you.

DeepDream is essentially the neural network equivalent of this phenomenon.

By repeatedly reinforcing patterns that a neural network picks up in an image, initially very subtle patterns (or imagined hints thereof) gradually turn into full manifestations of these patterns. In this way, eyes start growing out of dogs, snails appear sprouting out of buildings, and landscapes turn into bizarre cityscapes with fairy tale towers.
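To make the "reinforcing patterns" idea concrete, here is a minimal sketch of the underlying mechanism: gradient ascent on the input so that a layer responds more and more strongly. It is not Google's DeepDream implementation. The `filters` matrix is a hypothetical stand-in for a layer of a trained network, and the image is just a vector of 64 random values.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical stand-in for a trained network layer: 4 fixed "feature detectors"
# over an 8x8 image (flattened to 64 values). A real DeepDream setup would use
# a layer of a trained convolutional network instead.
filters = rng.normal(size=(4, 64))

def activation_strength(image):
    """Sum of squared activations: how strongly the layer responds to the image."""
    return float(np.sum((filters @ image) ** 2))

def gradient(image):
    """Analytic gradient of activation_strength with respect to the image."""
    return 2.0 * filters.T @ (filters @ image)

image = rng.normal(scale=0.1, size=64)   # start from faint noise ("the cloud")
before = activation_strength(image)

for _ in range(20):
    g = gradient(image)
    # Normalised gradient ascent step: nudge the image towards whatever
    # the layer already responds to.
    image = image + 0.01 * g / (np.abs(g).mean() + 1e-8)

after = activation_strength(image)
print(before, after)
```

Each step amplifies whatever faint pattern the detectors already pick up, so `after` ends up larger than `before`. With a real image network, those amplified patterns are what show up as eyes and towers.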

Beginning in high school and all the way up to my PhD I occasionally made a little bit of money on the side making music videos for bands. My very first project using deep learning was towards the end of my PhD in 2016, when I used the DeepDream network to achieve a somewhat novel visual effect in the video I did for “The Void” by Letters and Trees.

While no one would get excited about this anymore today, you have to keep in mind that 2016 is prehistoric in deep learning terms. Back then it was a pretty cool effect (at least that’s what I thought), and it was a nice way for me to familiarise myself with TensorFlow (which was still in its infancy at the time) and deep learning in general.

Somewhat more recently, we have moved beyond mere manipulation of existing images. In particular, we have seen astonishing breakthroughs in the generation of images by neural networks. Largely, this has been thanks to a category of networks called GANs, Generative Adversarial Networks.

GANs essentially work by pitting two networks against each other: a generator that produces fake data, and a discriminator (or critic) that has to judge whether data is real or a forgery created by the generator. They have achieved a shocking level of realism and believability in the kind of images they can produce.
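The tug of war between the two networks can be sketched through their loss functions. The snippet below is an illustration only: the discriminator scores are made-up numbers rather than outputs of a trained model, and it uses the common cross-entropy formulation with the non-saturating generator loss, which is one of several variants in use.

```python
import numpy as np

def discriminator_loss(d_real, d_fake):
    """Binary cross-entropy: the discriminator wants d_real near 1 and d_fake near 0."""
    return float(-np.mean(np.log(d_real)) - np.mean(np.log(1.0 - d_fake)))

def generator_loss(d_fake):
    """Non-saturating generator loss: the generator wants d_fake near 1."""
    return float(-np.mean(np.log(d_fake)))

# Hypothetical discriminator outputs (probability that a sample is real).
d_real = np.array([0.9, 0.8, 0.95])        # scores on real images
d_fake_poor = np.array([0.1, 0.2, 0.05])   # scores on unconvincing forgeries
d_fake_good = np.array([0.6, 0.7, 0.5])    # scores on convincing forgeries

# Better forgeries lower the generator's loss and raise the discriminator's.
print(generator_loss(d_fake_poor), generator_loss(d_fake_good))
print(discriminator_loss(d_real, d_fake_poor), discriminator_loss(d_real, d_fake_good))
```

During training, each network is updated to reduce its own loss, which by construction makes life harder for the other one.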

Particularly popular (and bizarre) have been the trippy images of (semi-)real faces morphing into one another, leading to mesmerising videos.

As a result of the level of realness such GAN forgeries have achieved recently, a whole cottage industry of websites such as thispersondoesnotexist.com has sprung up. Each time this particular site is refreshed, a new photorealistic portrait dreamed up by a neural network is generated. While this is fun to play around with, it also shines a spotlight on the growing issue around Deep Fakes and the credibility of real data in an age of near-perfect AI forgeries.

This is a point I will return to again below. While AI art is interesting and enjoyable in its own right, it also — just like other forms of art — allows us to highlight issues and voice our concerns. By using these issues or failure points as the foundation for our work and taking them to their extremes, we as artists can spread awareness and educate.

The best kind of art is not only aesthetically pleasing, but also invites people to think.

In October 2018 AI art really hit the mainstream for the first time when the French collective “Obvious” was able to auction off their GAN generated artwork “Edmond de Belamy” for $432,500 at the renowned auction house Christie’s.

This caused an uproar in both the art and AI communities, and raised the question of whether AI can truly generate art.

However, this was far from the only question it raised, and much of the attention in the aftermath of the auction was on the way Obvious had approached and carried out this project. They faced severe criticism for taking someone else’s code, training it on a simple dataset with questionable results, and selling the generated output printed on canvas.

I do not want to get too deep into the whole debate of whether Obvious deserved the money and attention (there is plenty of that discussion online if you are interested, for example this piece in the great publication Artnome). I do however want to say that in my opinion, independent of whether we consider the final output as art or not, Obvious are not artists in the way I see true artists.

It is very telling that they even signed the piece not with their own name, but with one of the key equations underlying the GAN algorithm. It is as if they want to say “We didn’t do it, the AI made it.”

This goes entirely contrary to what true AI artists are doing.

Like a painter studies his paintbrush and canvas and refines his strokes, like a pianist studies the intricacies of her instrument and practices her technique, so a true AI artist deeply studies the networks he is working with and the ways in which he needs to manipulate them in order to achieve precisely the creative outcomes he has in mind.

In this sense, AI and neural networks are not the creators! They are the pens, the brushes, the cameras, the violins, the chisels, etc. They are tools.

Due to their high complexity and novelty they may seem like magic, like autonomous creators, but in the end they are mere tools in the hands of (hopefully) skilful creators.

To me, what Obvious did is very cleverly use this novelty and high complexity to make a good sale. Whatever you might think of their artistic talent, they are certainly clever entrepreneurs.

It is a bit as if someone made a simple sketch in MS Paint just on the brink of its launch in 1985, printed it on a large canvas, and auctioned that off. The sheer novelty and (at the time) seemingly high complexity of the process that created it might have fetched a high price and impressed people. But it probably wouldn’t have been “good art”. And MS Paint certainly wouldn’t have been the creator.

Just as digital cameras are no more creators than analog cameras, and Photoshop is no more a creator than the pen and paper of a pre-digital graphic designer, so too are AI and deep learning (at least in the way we can realistically imagine them for the foreseeable future) not creators, but tools used by creators.

This might come as a bit of a disappointment to some who are excited about true autonomous creativity. But I am happy about this and think that it in no way diminishes the excitement around these technologies. They allow us creators truly new ways of creative expression. And more than just serve as fixed tools, they are almost a meta-tool that allows us to constantly dream up new tools and processes to achieve our creative visions.

With this rant about what/who I consider real AI artists out of the way, let me briefly introduce you to a handful of people that I think do fall into this category.

This list is in no way exhaustive and the community of AI artists is constantly growing. A good place to start looking into a broader spectrum of AI art is the gallery of the NeurIPS Workshop on Machine Learning for Creativity and Design put together by Luba Elliott.

For the sake of brevity I will also not go too deep into details of any of the artists but encourage you to check out their art (and thoughts) for yourself.

Meet the AI Artists

Probably the most well established AI artist is Mario Klingemann.

Klingemann, just like Obvious, has focused much of his attention on GAN related art, and particularly portraits. However, unlike Obvious, he has truly become a master of their intricacies, knowing exactly how to architect, train, and manipulate them to achieve the precise artistic outcome he desires and envisions.

Many of his works have an incredible subtlety reminiscent of more traditional forms of art, often eerily mixing aesthetics of fine art with more abstract forms of art.

“Neural Glitch”; Image credit: http://www.aiartonline.com/art/mario-klingemann/

What drives his pursuit of AI art is a search for “interestingness” as he outlines in an interview with Art Market Guru.

“I am trying to find interestingness which is a search that never ends since it is in its nature to melt away like a snowflake in your hand once you have captured it. Interestingness hides among the unfamiliar, the uncommon and the uncanny, but once you’ve discovered it and dragged it out into the spotlight the longer you look at it, the more it becomes familiar or normal and eventually loses its interestingness.

I am using machines as detectors to help me in the search through the ever-growing pile of information that is disposed into our world at an accelerating speed. At the same time, I am adding to that pile myself by using machines to generate ordered patterns of information faster than I could do it left to my own devices. Sometimes in this process it is me who decides and sometimes I leave it to the machine.”

This search aspect is a recurring theme within AI art.

We can imagine the abstract space of all possible artworks. This space is unbelievably high-dimensional and vast (potentially infinite depending on what media we consider). Traditionally, artists could only very slowly explore infinitesimal regions in this space.

Neural networks essentially provide tools that allow us to explore this space much faster and with a broader view. And the skill of an AI artist often lies in knowing how to guide this network-enabled exploration towards regions of “high interestingness”.

Klingemann also recently auctioned off one of his artworks, Memories of Passersby, at Sotheby’s. Rather than simply a static artwork, this piece actually included the generation mechanism itself, leading to a fully generative, indefinitely evolving installation.

In the end, the piece fetched a “mere” £32,000, which led to a widespread media storm with articles titled “AI artwork flops at auction, robot apocalypse not here yet”, proclaiming that the short-lived AI art scene was nothing more than a curiosity and was essentially already at its end.

However, personally I think that this is a good sign for the AI art community (and I think Mario Klingemann agrees with this). £32,000 is a reasonable and very respectable price, and rather than signaling the end of AI art, it signals the end of the AI art hype and the exploitation of the attention/novelty economy.

It is a sign that AI art has established itself as a serious and respectable form of art rather than an overplayed curiosity.

Very different from Mario Klingemann’s work, but no less interesting, is the work by Turkish media artist Memo Akten.

Whereas Klingemann’s work is very “focused” (for lack of a better word) and borders on fine art, Akten’s work is much more conceptual and varied. While his end results may appear less “refined”, they are all based on often very simple but ingenious and provocative ideas.

I highly encourage you to look at his catalogue of work for the sheer variety, but I want to share with you here my favorite piece of his, which he called Learning to See.

The idea is extremely simple, but the results are both stunning and thought provoking.

At the beginning of the training process, neural networks are usually initialized randomly, which means they have absolutely no notion of “the real world”. During training, through repeated exposure to data, they then form an image of the world (or at least the world as represented in the dataset). If this dataset is biased, so will be the worldview of the trained network.

In Learning to See, Akten took this idea to the extreme and trained various neural networks on very distinct datasets of images.

One network only saw images of oceans and shorelines, another network only saw images of fire, and yet another only experienced images of flowers.

As a result, once the networks finished their training process and were released on the “real world”, being shown more generic images, they could only interpret these in terms of what they had learned.

For example the “flower network” didn’t know any better than to interpret everything it saw in terms of flowers, seeing flowers everywhere it looked.

It is as if a child was raised from birth in an isolated environment surrounded by nothing but flowers and then suddenly released into the real world. Its visual cortex and pattern recognition system would probably struggle dramatically to interpret the new patterns and might equally see flowers everywhere.

While this is a thought experiment and mere speculation, it is well known that we humans have natural (and very useful) biases for certain patterns in our visual perception as well, such as a bias to see faces.

Learning to See raises the interesting question of how much of a biased perception each of us might have due to our unique upbringing and cultural background.

Just how differently do we all see and perceive the world?

While it is unlikely to be as strong as the bias of Akten’s networks, or the child in the thought experiment, there are almost certainly subtle differences from person to person.

Biases of algorithms have come to general attention in the past. Racist chatbots or sexist image recognition/classification models are only some examples. They are really one of the most fundamental issues of the data driven sciences and deep learning in particular.

In some cases the biases are very obvious. While these cases are certainly shocking and worrying, they are unlikely to be the most problematic ones, simply because they are so obvious. As data-driven technologies become more pervasive, it is the subtle but ever-present little biases that will be both crucial and hard to detect and eradicate.

While we might not have a direct solution, as artists we have the power to shine a spotlight on this issue and make it more accessible to the layman (as well as the seasoned practitioner), by taking it to the extreme.

This is where I think a lot of interesting AI art lives, particularly the more conceptual kind that Memo Akten also engages in: Taking well established neural networks, and pushing them towards (or beyond) their breaking point or domain of applicability. Not only does this often lead to interesting and unexpected results, it also gives us deeper insights into the issues such models might create if blindly let loose in real world scenarios.

The last artist I want to introduce here is computational design lecturer Tom White.

His project Perception Engines focuses, as the name suggests, on the role of perception in creativity. In his words,

“Human perception is an often under-appreciated component of the creative process, so it is an interesting exercise to devise a computational creative process that puts perception front and center.”

Again, it is essentially an exercise in tricking neural networks into doing things they were initially not intended to do by cleverly changing the domain on which they are applied.

It uses the idea of adversarial examples and puts an interesting artistic twist on it. Particularly, White sets up a feedback loop where the perception of a network guides the creative process, which then in turn again influences the perception.

In a nutshell (and a bit simplified), White took a neural network trained to recognize objects in images, and then used a second system that can generate abstract shapes and search for a result that can “trick” the network into a high certainty prediction of a particular object class. The results are seemingly abstract shapes (which White later turned into real screen-prints) that still convince the networks that they are photorealistic representations of certain objects.
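The search loop in this simplified description can be sketched in a few lines. This is not White's actual pipeline: the "classifier" is a hypothetical random linear layer with a softmax, the "drawing" is a vector of 16 numbers, and plain hill climbing stands in for whatever search procedure the real system uses.

```python
import numpy as np

rng = np.random.default_rng(1)

# Hypothetical stand-in for a trained image classifier: a fixed linear layer
# followed by softmax over 5 object classes, applied to a 16-value "drawing".
weights = rng.normal(size=(5, 16))

def class_probabilities(drawing):
    logits = weights @ drawing
    exp = np.exp(logits - logits.max())   # subtract max for numerical stability
    return exp / exp.sum()

target_class = 3
drawing = np.zeros(16)                    # start from a blank canvas
start_confidence = class_probabilities(drawing)[target_class]

# Simple hill climbing: propose a small random change to the drawing and keep
# it only if the classifier becomes more confident in the target class.
for _ in range(500):
    candidate = np.clip(drawing + rng.normal(scale=0.1, size=16), -1.0, 1.0)
    if class_probabilities(candidate)[target_class] > class_probabilities(drawing)[target_class]:
        drawing = candidate

final_confidence = class_probabilities(drawing)[target_class]
print(start_confidence, final_confidence)
```

The perception of the network (its confidence) steers every accepted change to the drawing, which is the feedback loop described above in miniature.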

What is interesting is that once we know what the network thinks it sees, we can in most cases suddenly also see the objects in most of the images (although I doubt anyone is tricked into confusing it with the real thing).

The true process White used is actually even more clever and deep than the abbreviated outline of the project I gave here. If you are interested in the details I highly recommend checking out his article on the piece.

Qosmo: Computational Creativity and Beyond

Now that you have a little bit of an idea of what AI art can be and have gotten to know a few people working in this emerging field, let me briefly give you my own story of how I got involved in this.

I actually started my academic career as a physicist, doing my PhD in Quantum Information Theory. But while doing this I realized that I wanted to do something a bit more applied. Having also had some entrepreneurial experience through a startup I co-founded, I decided that AI seemed both interesting from a purely academic perspective and a very promising tool for solving some really cool real-world problems (and making some money).

So after my PhD I worked for a few years at a startup that was applying AI to business problems in a wide range of fields, for example finance and health care. While there are certainly interesting problems to be solved in these domains, I personally got more and more interested in the creative side of AI.

Eventually, in February 2019 I finally decided to quit my previous job and join my friend Nao Tokui at his company Qosmo. If you are interested in the full story of how all this unfolded (and how I also became an author and musician at the same time), I wrote about all this in detail recently.

Qosmo is a small team of creatives based in Tokyo. The core concept of the company is “Computational Creativity”, with a strong focus on AI and music (but certainly not limited to these areas).

Here I want to briefly introduce you to three of our past projects.

AI DJ

Probably Qosmo’s most famous project so far is our AI DJ Project.

Originally started in 2016, AI DJ is a musical dialogue between human and AI.

In DJing, playing “back to back” means that two DJs take turns at selecting and mixing tracks. In our case we have a human playing back to back with an AI.

Specifically, a human (usually Nao) selects a track and mixes it, then the AI takes over and selects a track and mixes it, and so on, creating a natural and continuous cooperative performance.

This idea of augmenting human creativity and playing with the relationship of human and machine creativity is at the heart of what we do at Qosmo. We are not particularly interested in autonomously creative machines (nor do we really believe they are possible in the near future), but rather in how humans can interact with AI and machines for creative purposes.

AI DJ consists of several independent neural networks. At the core are two systems: one that selects a track based on the history of previously played tracks, and one that handles the beat matching and mixing.

Crucially, we are not using digital audio but actual vinyl records. The AI has to learn how to physically manipulate the disc (through a tiny robot arm trained using reinforcement learning) in order to align the beats and get the tempo to match.

While the project is already several years old, we are still constantly developing the system, for example by using cameras to analyze crowd behavior and trying to encourage people to dance more by adjusting the track selection based on this information.

We have taken this performance to many venues in the past, both local and global. Our biggest performance so far was at Google I/O 2019, where we did a one hour show on the main stage warming up the crowd right before CEO Sundar Pichai’s keynote.

You can read more about the details of AI DJ on our website.

Imaginary Soundscapes

As humans we have quite deeply linked visual and auditory experiences. Look at an image of a beach and you can easily imagine the sounds of waves and sea gulls. Looking at a busy intersection might bring car horns and construction noises to mind.

Imaginary Soundscapes is an experiment in giving AI a similar sense of imagined sounds associated with images. It is a web-based sound installation that lets users explore Google Street View while immersing themselves into the imaginary soundscapes dreamed up by the AI.

Technically it is based on cross-modal information retrieval, that is, retrieval across different media such as image-to-audio or text-to-image.

The system was trained with two models on video (i.e. visual and audio) inputs: one well-established, pre-trained image recognition model processes the frames, while another convolutional neural network reads the associated audio as spectrogram images, with a loss that forces the distribution of its output to get as close as possible to that of the first model.

Once trained, the two networks allow us to retrieve the best-matching sound file for a particular scene out of our massive environmental sound dataset.
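The retrieval step can be illustrated with a small sketch. It assumes, as a simplification, that both models output a probability distribution over the same set of scene classes and that the "best match" is the sound whose distribution has the smallest KL divergence from the image's. The class names, file names and numbers below are all hypothetical.

```python
import numpy as np

def kl_divergence(p, q, eps=1e-12):
    """KL(p || q) between two discrete probability distributions."""
    p = np.asarray(p, dtype=float)
    q = np.asarray(q, dtype=float)
    return float(np.sum(p * (np.log(p + eps) - np.log(q + eps))))

# Hypothetical class distributions over ("beach", "traffic", "forest").
# In a real system the image distribution would come from the image model and
# the sound distributions from the audio model applied to each sound file.
image_distribution = [0.80, 0.05, 0.15]
sound_library = {
    "waves.wav":    [0.75, 0.05, 0.20],
    "car_horn.wav": [0.05, 0.90, 0.05],
    "birdsong.wav": [0.10, 0.05, 0.85],
}

def best_matching_sound(image_dist, library):
    """Return the file whose distribution is closest to the image's."""
    return min(library, key=lambda name: kl_divergence(image_dist, library[name]))

match = best_matching_sound(image_distribution, sound_library)
print(match)
```

For a large sound dataset, the sound distributions would be computed once in advance, so that only the image needs to be processed at query time.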

The generated soundscapes are at times interesting, at times amusing, and at times thought provoking. Many of them match human expectations, while others surprise us. We encourage you to get lost in the imaginary soundscapes yourself.

Neural Beatbox

Our most recent art project is Neural Beatbox, an audio-visual installation which is currently featured at the Barbican in London as part of the exhibition “AI: More Than Human” (which also features works by Mario Klingemann and Memo Akten).

Just like AI DJ, this piece is centred around a musical conversation. However, unlike in AI DJ, here the AI is not a participant but only the facilitator, and the dialogue takes place between different viewers of the installation.

Rhythm and beats are some of the most fundamental and ancient means of communication among humans. Neural Beatbox enables anyone, no matter their musical background and ability, to create complex beats and rhythms with their own sounds.

As viewers approach the installation they are encouraged to record short clips of themselves, making sounds and pulling funny faces. Using that video, one neural network segments, analyses and classifies a viewer’s sounds into various categories of drum sounds, some of which are then integrated in the currently playing beat.

Simultaneously, another network continuously generates new rhythms.

By combining the contributions of subsequent viewers in this way, an intuitive musical dialogue between people unfolds, resulting in a constantly evolving piece.

The slight imperfections of the AI, such as occasional misclassifications or unusual rhythms, actually enhance the creative experience and result in interesting and unique musical experiences. As a viewer, trying to push the system beyond its intended domain by making “non-drum sounds” can lead to really interesting results, some of which are actually surprisingly musical and inspiring.

Currently Neural Beatbox is limited to being displayed in public places like the Barbican exhibition, but we are considering opening it up as an interactive web-based piece as well. We are just a bit concerned what kind of sounds and videos people on the internet might contribute to this installation… While the results could be hilarious and entertaining, they would probably also fairly quickly contain some NSFW content. ;)

Generative Models and VAEs

Besides my (still very recent) work at Qosmo, I have also done a few of my own artworks and more general creativity related projects with AI. Before showing you some of these, I’d briefly like to go into a quick and simple technical excursion.

Many of the models used in the creative scenes fall under the broad category of “Generative Models”. The GANs introduced above are one variant of this.

Generative models are essentially models that, as their name suggests, learn how to generate more or less realistic data. The general idea behind this is very nicely captured in a quote by physicist Richard Feynman.

“What I cannot create, I do not understand.”

— Richard Feynman

As researchers and engineers working with AI, we hope that if we can teach our models to create data that is at least vaguely realistic, these models must have gained some kind of “understanding” of what the real world looks like, or how it behaves.

In other words, we use the ability to create and generate meaningful output as a sign of intelligence.

Unfortunately, this “understanding” or “intelligence” still often looks like the following image.

Image credit: https://sufanon.fandom.com/wiki/File:Pix2pix.png

While our models are definitely learning something about the real world, their domain of knowledge is often severely limited, as we have already seen in the examples of bias above.

In my previous job working on practical business applications this was bad news. You do not want your financial or medical predictions to look like the image above!

Now as an artist however, I find this exciting and inspiring. In fact, as already pointed out, many artists deliberately seek these breaking points or edge cases of generative models.

My personal favourite type of generative model is the class of so-called Variational Autoencoders, or VAEs for short. I find them both extremely versatile and, from an information theoretic perspective, beautiful and elegant.

In short, VAEs are fed original data as an input, then have to compress and transmit this data through an information bottleneck, and finally try to reconstruct it as accurately as possible.

Because of the information bottleneck (more technically: a latent space with much lower dimensionality than the data space), the model can’t just pass on the data directly, but has to learn efficient abstractions and concepts.

For example if we want to apply it to images of dogs and cats, rather than simply transmitting every single pixel value, the model is forced to learn abstractions such as the notion of “dog” and “cat”, the concept of legs and ears, fur color, etc, which allow for a much more compact (although usually not completely lossless) representation of the data.
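As a rough sketch of the compress, transmit and reconstruct pipeline, the snippet below runs a single forward pass through a toy VAE. It is a simplification under several assumptions: the encoder and decoder are untrained random linear maps (a real VAE uses deep networks and is trained by minimising the loss shown), and squared error is used as the reconstruction term.

```python
import numpy as np

rng = np.random.default_rng(0)
data_dim, latent_dim = 64, 2              # the bottleneck: 64 values squeezed into 2

# Untrained, randomly initialised linear encoder and decoder (hypothetical
# stand-ins for the deep networks a real VAE would use).
w_mean = rng.normal(scale=0.1, size=(latent_dim, data_dim))
w_logvar = rng.normal(scale=0.1, size=(latent_dim, data_dim))
w_decode = rng.normal(scale=0.1, size=(data_dim, latent_dim))

def vae_forward(x):
    # Encoder: map the data to the mean and log-variance of a latent Gaussian.
    mean, log_var = w_mean @ x, w_logvar @ x
    # Sample a latent vector from that Gaussian (reparameterisation trick).
    z = mean + np.exp(0.5 * log_var) * rng.normal(size=latent_dim)
    # Decoder: map the latent vector back to data space.
    reconstruction = w_decode @ z
    reconstruction_error = float(np.sum((x - reconstruction) ** 2))
    # KL divergence between the encoder's Gaussian and a standard normal prior.
    kl = float(-0.5 * np.sum(1.0 + log_var - mean ** 2 - np.exp(log_var)))
    return z, reconstruction, reconstruction_error + kl

x = rng.normal(size=data_dim)
z, reconstruction, loss = vae_forward(x)
print(z.shape, reconstruction.shape, loss)
```

Because everything the decoder sees has to pass through the two numbers in `z`, training pushes the encoder to store only the most useful abstractions there.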

As a neat side product of this process, we get a compact mathematical description for our data, a so called latent vector or embedding. This allows us to do all sorts of interesting things, such as meaningful data comparisons as well as realistic interpolations between data points as in the example of the GAN faces above.
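Interpolating between data points then amounts to walking along a line in the latent space and decoding each point on the way. A minimal sketch of the latent-space part, with two made-up 3-dimensional embeddings (real embeddings would come from a trained encoder):

```python
import numpy as np

def interpolate(z_start, z_end, steps):
    """Evenly spaced latent vectors on the straight line from z_start to z_end."""
    fractions = np.linspace(0.0, 1.0, steps)[:, None]
    return (1.0 - fractions) * z_start + fractions * z_end

# Hypothetical 3-dimensional embeddings of two data points.
z_cat = np.array([1.0, 0.0, -2.0])
z_dog = np.array([-1.0, 4.0, 2.0])

path = interpolate(z_cat, z_dog, steps=5)
# Decoding each row of `path` with a trained decoder would give a gradual
# morph from the first data point to the second.
print(path)
```

Straight lines are the simplest choice. Other interpolation schemes, such as spherical interpolation, are also used in practice.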

If you want to learn about all of this in more detail, I wrote an in depth discussion of VAEs from the perspective of a cooperative game played between two players.

Personal Projects

The projects I want to show you in the following all used VAEs in one way or another.

Latent Pulsations

When a VAE is initialised, its representation of the data is completely random since it has not yet learned anything about the training data. Then as the training unfolds, the network gradually learns distinct concepts and abstractions, and clusters of similar data start forming in the latent space, which crystallise out more and more as the model converges.

Latent Pulsations visualises one such training process, from initial random chaos, over various stages where the model goes through shifting phases trying out different representations, to finally settling on one which exhibits fairly distinct clustering.

Each point in the latent space here represents one of roughly 300k consumer complaint texts covering about 12 different financial products (e.g. “credit card”, “student loan”,…), which are represented by the different colours.

Besides the natural learning process, I also added some periodic random noise to the embeddings to create a beating pattern that syncs with the track “2 Minds” by InsideInfo, time-stretched from the original 172bpm to 160bpm to better match the video frame rate. I chose the track “2 Minds” because the title reminded me of the encoder-decoder relationship of the VAE.
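The arithmetic behind the tempo change can be sketched as follows. The frame rate of 24 fps is purely an assumption for illustration (it is not stated here), and the decaying noise envelope is a hypothetical example of a beat-synced pulse, not the one used in the piece.

```python
import math

original_bpm = 172.0
target_bpm = 160.0
frame_rate = 24.0        # assumption: the actual video frame rate is not stated

def frames_per_beat(bpm, fps):
    """Number of video frames that elapse during one beat."""
    return fps * 60.0 / bpm

# Slowing the track down makes it this many times longer.
slowdown = original_bpm / target_bpm

def pulse_amplitude(frame_index, period_frames):
    """Hypothetical noise envelope: peaks on each beat, decays until the next."""
    phase = (frame_index % period_frames) / period_frames
    return math.exp(-4.0 * phase)

print(slowdown)
print(frames_per_beat(original_bpm, frame_rate))   # not a whole number of frames
print(frames_per_beat(target_bpm, frame_rate))     # 9.0 frames per beat
```

Under the 24 fps assumption, 160bpm puts every beat exactly on a frame boundary, while 172bpm would let beats drift between frames.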

Usually when creating art with generative models we think of the actual output created by the model. Latent Pulsations, however, flips this notion on its head, showing that latent spaces themselves can have an inherent beauty and artistic quality, even if the data the model was trained on is decidedly dull, such as the consumer complaint texts used in this case.

Latent Landscapes

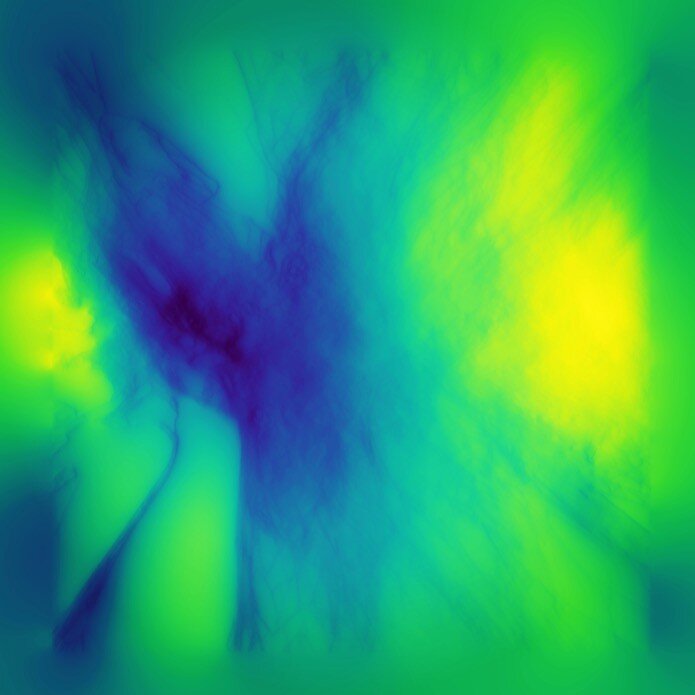

Another related piece that goes even further in terms of visualising the elegance and beauty deeply ingrained in the latent space was Latent Landscapes.

I like to think of these as “brain scans” of neural networks.

More technically, these images are generated by analysing the underlying metric of the latent space (this piece was actually a by-product of a research paper we were working on). Roughly speaking, latent spaces are not “flat”, and distances within them not uniform. Latent Landscapes visualises just how much curvature, how much distance distortion, there is at different locations in the latent space.
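One common way to quantify such distance distortion is to look at the Jacobian J of the decoder and compute sqrt(det(JᵀJ)), which measures how much a tiny patch of latent space is stretched when mapped to data space. The sketch below illustrates that idea only. It is not necessarily the method used for the piece or the research paper, and the decoder is a hypothetical toy function rather than a trained VAE.

```python
import numpy as np

def decoder(z):
    """Hypothetical toy decoder mapping a 2-D latent point to 3-D data space."""
    x, y = z
    return np.array([x, y, np.sin(2.0 * x) * np.cos(2.0 * y)])

def jacobian(f, z, h=1e-5):
    """Central-difference Jacobian of f at z (rows: outputs, columns: latent axes)."""
    z = np.asarray(z, dtype=float)
    columns = []
    for i in range(z.size):
        step = np.zeros_like(z)
        step[i] = h
        columns.append((f(z + step) - f(z - step)) / (2.0 * h))
    return np.stack(columns, axis=1)

def distortion(z):
    """sqrt(det(J^T J)): how much a small latent patch is stretched by the decoder."""
    j = jacobian(decoder, z)
    return float(np.sqrt(np.linalg.det(j.T @ j)))

# Evaluate the distortion on a grid; plotting this array as an image would give
# a "landscape" of the latent space.
grid = np.linspace(-1.5, 1.5, 25)
landscape = np.array([[distortion((x, y)) for x in grid] for y in grid])
print(landscape.shape, landscape.min(), landscape.max())
```

A value of 1 means the decoder leaves areas unchanged at that point, while larger values mark regions where small steps in latent space correspond to large changes in the output.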

The results above, also based on a VAE trained on the same financial complaints dataset, show abstract formations that are reminiscent of alien landscapes or cosmic gas clouds.

Just like in Latent Pulsations, the network itself, rather than the output of the generative model, becomes the artwork.

NeuralFunk

The final project I want to mention is my biggest solo project so far. Since I have previously written extensively about this project I only want to give you a very brief outline.

NeuralFunk is an experiment in using deep learning for sound design. It’s an experimental track entirely made from samples that were synthesized by neural networks.

Again, neural networks were not the creators of the track, but they were the sole instruments that were used to compose it. And so the result is not music made by AI, but music made using AI as a tool for exploring new ways of creative expression.

I used two different types of neural networks in the creation of the samples, a VAE trained on spectrograms and a WaveNet (which could additionally be conditioned on spectrogram embeddings from the VAE). Together these networks provided numerous tools for generating new sounds, from reimagining existing samples or combining multiple samples into unique sounds, to dreaming up entirely new sounds completely unconditioned.
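As an illustration of what "combining multiple samples" could look like at the embedding level, here is a minimal sketch that blends VAE embeddings with a weighted average. This is one plausible approach rather than a description of the actual NeuralFunk pipeline; the function name and the example embeddings are hypothetical, and the blended vector would still have to be decoded (or used to condition a WaveNet) to become sound.

```python
def blend_embeddings(embeddings, weights=None):
    """Weighted average of equally sized embedding vectors (weights are normalised)."""
    if weights is None:
        weights = [1.0] * len(embeddings)
    total = sum(weights)
    dim = len(embeddings[0])
    return [
        sum(w * emb[d] for w, emb in zip(weights, embeddings)) / total
        for d in range(dim)
    ]

if __name__ == "__main__":
    kick = [0.0, 0.0]   # hypothetical embedding of a kick drum spectrogram
    snare = [2.0, 4.0]  # hypothetical embedding of a snare spectrogram
    print(blend_embeddings([kick, snare]))          # equal mix
    print(blend_embeddings([kick, snare], [3, 1]))  # mostly kick
```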

The resulting samples were then used to produce the final track.

The title NeuralFunk is inspired by the drum & bass sub-genre Neurofunk, which was what I initially had in mind. But over the course of the project it turned into something more experimental, matching the experimental nature of the sound design process itself.

If you want the full details of this project (including the code), check out my write-up on it.

So what’s next for me?

I have a big vision of an extended live performance using AI which would marry many of the concepts (and projects) introduced above and take them to the next level, while giving me an entirely new means of musical performance.

So far, this is however nothing more than a vision, and given the scale of the project I’m a bit scared of even getting started.

What I am however working on very actively and passionately at the moment is a book about the importance of Time Off.

While this might seem somewhat tangential, it actually ties directly into the relationship between AI and creativity in several ways.

To convince you of this, let’s go on a little excursion. It might seem random and unrelated at first, but bear with me for a bit and hopefully by the end you will agree with me and get excited about this vision of the future.

The Future of Work, Leisure, and Creativity

For most of human history the notion of work was essentially equivalent to manual labor. First on fields and farms, and later in factories.

At the beginning of the 20th century the average factory worker worked for more than ten hours a day, six days a week.

This all changed in 1926, when Henry Ford introduced the eight-hour workday and five-day workweek (while simultaneously significantly raising salary above industry standards).

Why did Ford do this? It was not because he was just a nice guy. He might have been, I’m not sure, but his reasons for doing this were more practical and business-driven.

First of all, he recognized that if he offered better working conditions than anyone else, he could easily attract the best talent. And this is exactly what happened. The most skilled workers left his competitors and lined up to work at his factories. And if someone wasn’t performing, he was simply let go. There were more than enough people willing to take over the position.

Second, he figured that if people have no free time or are too exhausted to use their free time, they won’t spend any money on leisure activities.

“People who have more leisure must have more clothes. They eat a greater variety of food. They require more transportation in vehicles. […] Leisure is an indispensable ingredient in a growing consumer market because working people need to have enough free time to find uses for consumer products, including automobiles.”

It was purely economic.

By giving his workers more leisure (and more money to spend on leisure), the same workers would finally be able and incentivized to buy the very products they were producing. More free time would not harm, but boost the economy!

Finally, and most interestingly for our discussion here, he realized that his workers would simply be able to do a better job in fewer hours, for two distinct reasons.

The restrictions on time would lead to more innovation and better methods. People would actually think about how to work rather than just grinding things out.

“We can get at least as great production in five days as we can in six, and we shall probably get a greater, for the pressure will bring better methods.”

— Henry Ford

Besides, more rested workers are in general more effective, motivated, and make fewer costly mistakes.

In essence, Ford saw that even for manual labor, equating busyness with productivity only worked up to a certain point.

Around the same time as Ford shortened the working time in his factories, with repercussions that we still feel today, philosopher Bertrand Russell wrote his wonderful essay “In Praise of Idleness”, first published in 1932.

In this essay Russell noted that historically it was not work, but rather the celebration of leisure that allowed us to accomplish many of the things we now consider the biggest achievements of civilisation.

“In the past there was a small leisure class and a large working class. The leisure class enjoyed advantages for which there was no basis in social justice […] It contributed nearly the whole of what we call civilization. It cultivated the arts and discovered the sciences; it wrote the books, invented the philosophies, and refined social relations. Even the liberation of the oppressed has usually been inaugurated from above. Without the leisure class mankind would never have emerged from barbarism.”

He went on to argue that the way forward is to re-discover our appreciation for leisure and time off (at least the high quality kind of leisure, such as reflection and contemplation, not endlessly scrolling down our Facebook feed).

And to have everyone join the leisure class, not just a select few.

“I want to say, in all seriousness, that a great deal of harm is being done in the modern world by the belief in the virtuousness of work, and that the road to happiness and prosperity lies in an organized diminution of work.” — Bertrand Russell

Given this trend in the early 20th century, we should now be living in a culture which, similar to, say, Ancient Greece and Rome, values leisure highly and sees busyness as a form of laziness: a lack of time management and of deep, reflective thought.

Yet the opposite seems to be true. We find ourselves in a culture that all too often wears busyness, stress and overwork as a badge of honor, a sign of accomplishment and pride. Someone who leaves work on time and takes ample breaks during the day can’t possibly be as productive as someone who grinds out long hours of overwork day after day and barely ever leaves their desk, right?

The problem is that even though we have largely shifted from manual labor to knowledge work, workers still suffer from the intellectual equivalent of factory work mentality!

To some extent this may be justified: a large residue of the intellectual equivalent of factory work still remains. This is the kind of work where more time put in actually does largely correlate with more output generated (at least up to a certain point, as Henry Ford realized).

It’s the kind of work that genuinely justifies long hours and sacrificing time off. But this is also the least valuable kind of work. And this value is further diminishing all the time. Rapidly. These are exactly the kind of tasks that are ripe for disruption, and ultimately replacement, by AI and other productivity and automation tools. Their days are numbered.

In my previous job I was leading the development of an AI-powered tool that helped financial analysts search through large amounts of news data and generate insights from these texts. With this tool, analysts managed to cut the time it took to search for relevant information and generate certain reports for their managers by up to 90%! That’s up to 90% less time spent on a routine task, time that can now be reinvested in work that actually matters and truly utilizes their skill and creativity.

Or alternatively, it can be invested into time off. And this is a worthwhile investment.

AI will not take away our jobs, nor will it threaten or weaken our human values. My friend and co-author John Fitch and I would argue that the opposite will be true. Yes, AI will disrupt the job landscape, but the kind of jobs that will remain, as well as those that are newly created, will be centered around human skills such as creativity and empathy.

And these skills are highly non-linear with respect to time. More time in absolutely does not correspond to better or higher output. In fact, it is very easy to put in too much time, to ignore the balancing and nourishing effects of rest, and as a result to diminish one’s output.

In the future of work, time off will not be something that is considered a “nice to have” or an enticing benefit that a generous employer provides to attract and retain talent.

Instead, the deliberate practice of time off will be one of the key skills and competitive advantages. In addition to our work ethic, we should seriously start thinking about our “rest ethic”.

John and I are so excited about this future that we are currently working on a book on the topic of Time Off.

We hope to encourage more people to re-discover this ancient art that seems to have been largely forgotten, and give very practical tips for how to cultivate and use high quality leisure, as well as share incredible stories from both history and present times about amazing people who have harnessed the power of time off.

We believe that already, companies and individuals that focus on placing empathy and creativity, and the practices and habits of leisure that support them, at the core of their corporate or personal philosophy will thrive.

And soon, it might be the only viable option.

Busywork is easy to automate, and no one, no matter how many hours they put in and how much of their life they sacrifice, is going to outwork AI on these tasks.

Creativity and empathy on the other hand will remain distinctly human for a long time to come.

Those who understand these skills as well as the new tools will embrace AI not as an obstacle or adversary, but as an enabling technology to take their humanity to the next level.

What will empower them to do so will be a healthy approach to the rhythm of work and leisure, and the deliberate practice of time off.

So we might as well start practicing now!

I hope that this long article got you excited about both AI art itself and the wider implications that AI will have on allowing us to focus more on creativity in the future.

I also hope that I inspired you to take action yourself.

If you are an AI practitioner, hopefully you will play around with your own AI art, maybe starting by pushing your models towards and beyond their limits and seeing how they behave.

But whether you directly work with AI or not, I really hope that you will consider practicing more, and better, time off.

It’s not slacking off or being lazy. It’s one of the best investments you can make in yourself!

Let’s leave busywork to AI and become more human!